Question:

Write a SQL to find trade couples satisfying the following conditions for each stock:

– The trades should be within 10 seconds of window

– The prices difference between the trades should be more than 10%

Insert Statement:

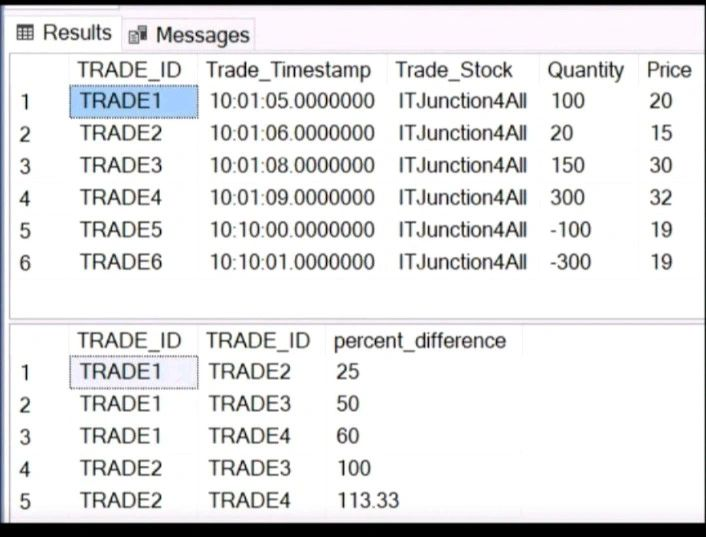

CREATE TABLE Trade_tbl (

"TRADE_ID" varchar(20),

"Trade_Timestamp" time,

"Trade_Stock" varchar(20),

"Quantity" int,

"Price" Float

);

INSERT INTO Trade_tbl VALUES ('TRADE1', '10:01:05', 'ITJunction4All', 100, 20);

INSERT INTO Trade_tbl VALUES ('TRADE2', '10:01:06', 'ITJunction4All', 20, 15);

INSERT INTO Trade_tbl VALUES ('TRADE3', '10:01:08', 'ITJunction4All', 150, 30);

INSERT INTO Trade_tbl VALUES ('TRADE4', '10:01:09', 'ITJunction4All', 300, 32);

INSERT INTO Trade_tbl VALUES ('TRADE5', '10:10:00', 'ITJunction4All', -100, 19);

INSERT INTO Trade_tbl VALUES ('TRADE6', '10:10:01', 'ITJunction4All', -300, 19);Solution

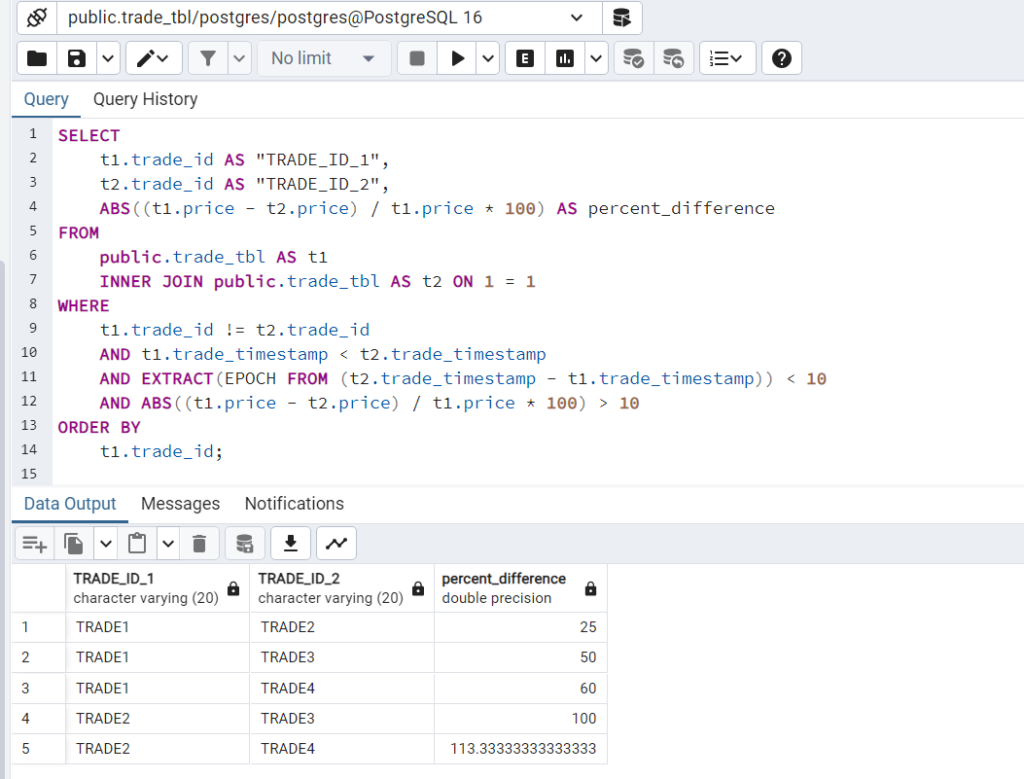

SELECT

t1.trade_id AS "TRADE_ID_1",

t2.trade_id AS "TRADE_ID_2",

ABS((t1.price - t2.price) / t1.price * 100) AS percent_difference

FROM

public.trade_tbl AS t1

INNER JOIN public.trade_tbl AS t2 ON 1 = 1

WHERE

t1.trade_id != t2.trade_id

AND t1.trade_timestamp < t2.trade_timestamp

AND EXTRACT(EPOCH FROM (t2.trade_timestamp - t1.trade_timestamp)) < 10

AND ABS((t1.price - t2.price) / t1.price * 100) > 10

ORDER BY

t1.trade_id;

I extracted this exercise from Ankit Bansal’s Linkedin Post, and developed solution on my machine.

Follow him for more SQL contents –

https://www.linkedin.com/in/ankitbansal6/

References:

Discover more from Data Engineer Journey

Subscribe to get the latest posts sent to your email.